Let’s face it: SEO is a complicated and challenging universe. From keywords to backlinks, there are endless technical issues to consider, including how search engines crawl and index sites.

Noindex, nofollow, and disallow directives can help manage these SEO aspects, but it’s crucial to understand the difference between them and how to use them effectively.

In this article, you will learn what each directive does, how to set it up, and when to use it. Along the way, we’ll share real-world examples of how these directives impact SEO performance so that you can see the theory in action.

You’ll learn to use noindex, nofollow, and disallow directives to avoid critical mistakes that can ruin your clients’ search visibility. So buckle up, grab a coffee, and let’s dive in!

What is Noindex?

Definition and Function

Noindex is a directive, most often set in a meta robots tag, that tells search engines not to index specific website pages. It means the pages won’t appear in search engine results pages (SERPs).

However, the pages will still be crawled, and Google will know about their existence and content.

There are several ways to benefit from the noindex directive:

- Preventing search engines from indexing pages that contain duplicate content, such as product pages with identical descriptions but different URLs.

- Keeping low-quality pages from appearing in search results and potentially harming a website’s overall SEO performance.

- Keeping unfinished or incomplete pages out of the index while a website is under construction.

How to Set Up a Noindex Directive?

You can use HTTP headers or meta robots tags to set up noindex on a web page. The HTTP header option is the X-Robots-Tag response header, which the server sends along with the page; it is usually configured by developers.

Meta robots tags are inserted into the <head> section of an individual page’s HTML code and can be added by content creators or website owners.

Setting up noindex correctly is essential, as mistakes can seriously affect a website’s search visibility. For example, accidentally applying the noindex directive to an entire website can remove all its pages from search results.
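To make the two setup options concrete, here is a minimal Python sketch that shows what each one looks like and checks whether a page carries noindex. The helper names (`RobotsMetaParser`, `is_noindexed`) and the sample page are hypothetical, and the header check is simplified: it assumes a plain `X-Robots-Tag: noindex` value with no crawler-specific prefix.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collects directives from <meta name="robots" content="..."> tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr_map = {name.lower(): (value or "") for name, value in attrs}
        if attr_map.get("name", "").lower() == "robots":
            # The content attribute is a comma-separated list of directives.
            for part in attr_map.get("content", "").split(","):
                self.directives.add(part.strip().lower())


def is_noindexed(html, headers):
    """Return True if the HTML or the response headers carry noindex.

    `headers` is assumed to be a plain dict keyed exactly as "X-Robots-Tag".
    """
    parser = RobotsMetaParser()
    parser.feed(html)
    header_value = headers.get("X-Robots-Tag", "")
    header_directives = {part.strip().lower() for part in header_value.split(",")}
    return "noindex" in parser.directives or "noindex" in header_directives


# Option 1: a meta robots tag inside the page's <head>.
page_with_meta = (
    '<html><head><meta name="robots" content="noindex"></head><body></body></html>'
)

# Option 2: an HTTP response header sent by the server.
headers_with_noindex = {"X-Robots-Tag": "noindex"}

plain_page = "<html><head></head><body></body></html>"

print(is_noindexed(page_with_meta, {}))                # True
print(is_noindexed(plain_page, headers_with_noindex))  # True
print(is_noindexed(plain_page, {}))                    # False
```

Running a check like this across every template of a site is one way to catch an accidental site-wide noindex before it goes live.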

By using noindex strategically, website owners and marketers can ensure that search engines show users only their high-quality content.

What is Disallow?

Definition and Function

Disallow is another effective tool for managing SEO performance that tells search engine crawlers not to access and crawl specific pages or sections of a website.

To use it, you need to know robots.txt. In short, it is a text file located in the root directory of a website that tells search engine crawlers which pages or sections of the site they may or may not crawl.

How to Use Disallow in Robots.txt?

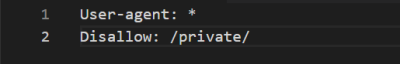

To use disallow in robots.txt, you will need to identify the URLs you want to exclude from crawling and add them to the file. For example, if you want to exclude a folder called “private” on your clients’ website, you would add two lines to the robots.txt file: “User-agent: *” followed by “Disallow: /private/”.

In this example, “User-agent: *” specifies that the rule applies to all web crawlers, and “Disallow: /private/” tells the crawlers not to crawl any URLs whose path begins with “/private/”.
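As a quick way to see how such a rule is interpreted, the following sketch feeds the two lines to Python’s standard-library robots.txt parser. The `example.com` URLs and the `can_crawl` helper are made up for illustration, and individual search engines may apply additional matching rules that this parser does not model.

```python
from urllib.robotparser import RobotFileParser

# The rule from the example above.
robots_txt = """\
User-agent: *
Disallow: /private/
"""

parser = RobotFileParser()
parser.parse(robots_txt.splitlines())


def can_crawl(url):
    """Ask whether a generic crawler ("*") may fetch the given URL."""
    return parser.can_fetch("*", url)


print(can_crawl("https://example.com/private/report.html"))  # False
print(can_crawl("https://example.com/blog/post.html"))       # True
# The rule matches from the start of the path, so this URL is not blocked:
print(can_crawl("https://example.com/blog/private/"))        # True
```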

NOTE! Using disallow in robots.txt does not guarantee that search engines won’t index the excluded pages. Most search engines will respect the directive and not crawl them, but a blocked URL can still end up in the index if search engines find links to it from other pages of the site or from external websites.

Therefore, we can conclude that the noindex directive is the more effective way to remove a page of your clients’ site from the index.

When to Use Disallow?

You can use disallow when you want to block search engines from crawling specific parts of your clients’ website. For example, you can keep crawlers away from low-value pages, such as thank-you pages, login pages, or pages with thin content.

What is Nofollow?

Definition and Function

Nofollow is a link attribute (rel="nofollow") that indicates the link should not pass on any ranking credit or authority to the destination URL. Users can still click the link as usual.
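For illustration, the sketch below shows what the attribute looks like in a page’s HTML and sorts the links into followed and nofollowed groups. The `LinkRelParser` class and the sample links are hypothetical; the only assumption about the markup is that nofollow appears as one of the space-separated values of a link’s `rel` attribute.

```python
from html.parser import HTMLParser


class LinkRelParser(HTMLParser):
    """Splits the links on a page into followed and nofollowed lists."""

    def __init__(self):
        super().__init__()
        self.followed = []
        self.nofollowed = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        attr_map = {name: (value or "") for name, value in attrs}
        href = attr_map.get("href")
        if not href:
            return
        # rel may hold several space-separated tokens, e.g. "noopener nofollow".
        rel_tokens = attr_map.get("rel", "").lower().split()
        if "nofollow" in rel_tokens:
            self.nofollowed.append(href)
        else:
            self.followed.append(href)


html = """
<p>
  <a href="https://example.com/partner">A trusted partner</a>
  <a href="https://example.org/ad" rel="nofollow">A paid placement</a>
  <a href="https://example.net/forum" rel="noopener nofollow">A user-submitted link</a>
</p>
"""

parser = LinkRelParser()
parser.feed(html)
print(parser.followed)    # ['https://example.com/partner']
print(parser.nofollowed)  # ['https://example.org/ad', 'https://example.net/forum']
```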

Using nofollow can be helpful in a few ways:

- Preventing the flow of link equity to low-quality or untrusted websites

- Reducing the risk of being penalized by search engines for participating in link schemes or buying links

- Avoiding the dilution of a website’s link juice by controlling the number of outgoing links from a page

Nofollow is an attribute that causes a lot of confusion among users. The key detail is that nofollow doesn’t prevent search engines from indexing the linked page.

Some webmasters believe that nofollow can be used to remove pages from search results, but they are wrong.

It does not affect whether the page is indexed or not.

To learn how to master nofollow and avoid common mistakes, check out our comprehensive guide: “How to Use Nofollow Links Effectively in Your Link Building Strategy.”

You will learn when to use the nofollow tag, the impact of nofollow links on rankings, and the importance of achieving a natural link profile.

The Problem with Using Disallow and Noindex Together

As we already know, disallow and noindex can be practical tools for managing search engine crawling and indexing. However, combining these two directives can create problems for SEO that website owners should be aware of.

Disallow is a directive used in robots.txt files to instruct search engine bots not to crawl (scan) certain pages or directories on a website.

However, the page can still get into the search engine index if other sites link to it or if it is listed in the sitemap.

On the other hand, noindex prevents the page from being indexed, which means it cannot appear in a Google search. However, the page will still be crawled, and Google will know of its existence and content.

While these two directives may seem similar, they serve different purposes.

Let’s say your client has a website with a page containing low-quality content. You decide to use the noindex tag to prevent search engines from indexing the page, but you also want to ensure that the page is not crawled.

So you add the disallow directive for that page in the robots.txt file.

However, using noindex and disallow together can create confusion for search engines. The noindex tag tells search engines not to index the page, but the disallow directive tells them not to crawl it.

If search engines can’t crawl the page, they won’t see the noindex tag, so they have no way of knowing that the page should stay out of the index.

This can lead to a situation where the page gets indexed, even though you intended to prevent that from happening.
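The conflict described above can be detected mechanically. The following sketch, built on Python’s standard library, flags a page whose noindex tag is hidden behind a disallow rule. The function name `noindex_is_hidden`, the `/old-offer/` path, and the sample rules are all hypothetical, and only the meta robots tag is checked, not the HTTP header.

```python
from html.parser import HTMLParser
from urllib.robotparser import RobotFileParser


class NoindexFinder(HTMLParser):
    """Sets found=True when a meta robots tag contains noindex."""

    def __init__(self):
        super().__init__()
        self.found = False

    def handle_starttag(self, tag, attrs):
        attr_map = {name: (value or "").lower() for name, value in attrs}
        if tag == "meta" and attr_map.get("name") == "robots":
            tokens = [t.strip() for t in attr_map.get("content", "").split(",")]
            self.found = self.found or "noindex" in tokens


def noindex_is_hidden(robots_txt, url, html):
    """True when the page carries noindex but robots.txt stops crawlers from reading it."""
    rules = RobotFileParser()
    rules.parse(robots_txt.splitlines())
    finder = NoindexFinder()
    finder.feed(html)
    return finder.found and not rules.can_fetch("*", url)


page = '<html><head><meta name="robots" content="noindex"></head></html>'
blocking_rules = "User-agent: *\nDisallow: /old-offer/\n"
open_rules = "User-agent: *\nDisallow:\n"  # an empty Disallow blocks nothing

url = "https://example.com/old-offer/"
print(noindex_is_hidden(blocking_rules, url, page))  # True: the tag can never be seen
print(noindex_is_hidden(open_rules, url, page))      # False: crawlers can read the tag
```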

In addition, using disallow and noindex together can make it more difficult for search engines to understand your clients’ website structure and crawl it efficiently.

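Therefore, it is generally recommended to use either noindex or disallow to achieve your desired result rather than both together. If the goal is to keep a page out of search results, use noindex and leave the page crawlable so that search engines can see the tag.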

Best Practices for Using Noindex, Nofollow, and Disallow

Undoubtedly, noindex, nofollow, and disallow are handy tools to manage the crawling and indexing of your clients’ website. Using them strategically can improve the website’s SEO performance and help you avoid penalties from search engines.

Here are some best practices for using these tools effectively:

- Test changes carefully: Before implementing any changes to your clients’ robots.txt file or meta tags, test them thoroughly in a staging environment to ensure they don’t cause unintended consequences.

- Monitor your clients’ website crawl and index status regularly: Keep an eye on the website’s crawl and index status using tools like Google Search Console and WebCEO. It will help you identify any crawl errors, indexing issues, or penalties from search engines so that you can address them promptly.

- Use noindex and nofollow sparingly: While these tags can be helpful in certain situations, overusing them can harm your clients’ website SEO performance. Use them only when necessary to keep certain pages out of the index or to stop certain links from passing authority.

- Consider using disallow instead of noindex: If you want to prevent search engines from crawling a page, consider using the disallow directive in your clients’ robots.txt file instead of noindex. It will prevent search engines from accessing the page altogether. Keep in mind, however, that a disallowed page can still be indexed if other pages link to it.
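The first practice in the list, testing changes before they go live, can be partly automated. Below is a minimal sketch, assuming you keep a list of URLs that must stay crawlable and a list that must stay blocked; the `check_robots` helper, the URLs, and the draft rules are hypothetical.

```python
from urllib.robotparser import RobotFileParser


def check_robots(robots_txt, must_allow, must_block):
    """Return a list of problems; an empty list means the rules behave as expected."""
    rules = RobotFileParser()
    rules.parse(robots_txt.splitlines())
    problems = []
    for url in must_allow:
        if not rules.can_fetch("*", url):
            problems.append(f"blocked but should be crawlable: {url}")
    for url in must_block:
        if rules.can_fetch("*", url):
            problems.append(f"crawlable but should be blocked: {url}")
    return problems


# A draft with a costly mistake: "Disallow: /" blocks the whole site.
draft = "User-agent: *\nDisallow: /\n"

problems = check_robots(
    draft,
    must_allow=["https://example.com/", "https://example.com/pricing"],
    must_block=["https://example.com/login"],
)
for problem in problems:
    print(problem)
```

With the draft above, the check reports the home page and the pricing page as blocked, which is the kind of mistake that is far cheaper to catch in staging than in search results.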

In addition to these best practices, you can use the WebCEO Backlink Checker, which comprehensively analyzes your clients’ backlink profile, including metrics like domain authority, page authority, and spam score.

With this tool, you can quickly identify low-quality or spammy links and take steps to remove them while also building high-quality backlinks to improve your clients’ website search engine rankings.

Conclusion

Understanding and implementing the noindex, nofollow, and disallow directives is crucial for achieving SEO success.

By properly using these directives, you can improve how your clients’ website is crawled and indexed, prevent the flow of link equity to low-quality or untrusted websites, and avoid being penalized by search engines.

Remember, SEO is an ongoing process, and it’s important to continually optimize your clients’ website to achieve the best possible results.