If you don’t know the SEO field well enough to spot potential or existing issues at a glance, you’ll have a lot of work ahead of you. But don’t worry, it won’t hurt.

We will teach you how to run a high-quality technical SEO audit of your projects, so you can be satisfied with the results without racking your brain in the process.

What is a technical SEO audit of a website?

A technical SEO audit is one of the most important procedures for any webmaster or SEOer. At its core, the process consists of finding technical issues, fixing them, and preventing them from coming back.

Technical issues are alarming because they disrupt how a website works and, most of the time, ruin the user experience. Both are strong signals to Google that your website isn’t a great place to welcome visitors.

When should you perform a technical audit of a website?

To cut a long story short: always. It doesn’t matter whether you prefer to check everything manually or use SEO tools. You are expected to know the technical condition of your projects inside and out at any moment.

To streamline your workflow, you can use report-generating SEO software that alerts you to issues in real time. If your time is split across multiple projects, you can schedule PDF or CSV reports for them to be delivered at regular intervals. The WebCEO platform provides such solutions.

Your rankings are usually the first distress call. We recommend running full-fledged on-page and off-page SEO audits on a regular basis.

We asked our clients, experienced SEO specialists, how often they run such audits:

Bronson Harrington, SEO Wushu: “We normally do audits at the end of every sprint cycle, to make sure that we stay on top of things. Then the report results are built into the next sprint. This process gets rinsed and repeated.”

Martin Suttill, 54 Solutions: “We usually scan once or twice a month, any more than that you don’t see any real change where you can take actions that will make a difference.”

Emiliano Elías, ADBOT Digital Marketing Agency: “We have all our reports run twice a month to have a good sense of what is happening. We have the reports set to run on the 7th and 21st of every month because everyone else runs on the 1st and 15th of every month and sometimes you get in a long queue before your reports start to run as scheduled.”

What should you consider during the technical audit of a website?

We have finally come to the most important information. Fasten your seatbelts, we are starting the journey!

STEP 1: Check out a website’s current well-being

First, you need to know the real condition of the projects you are starting to work on. Whether it’s a brand-new website or one that other specialists had been optimizing before you came, you have to know every detail before you start.

Let’s not indulge in illusions: you can’t properly analyze a website without tools that deliver the necessary data in a matter of minutes. It may take longer depending on two circumstances: the capacity of the tool provider you have chosen and the size of the website.

First, let’s start with a set of Google tools.

Practically speaking, Google’s tools are mandatory whether you like it or not. A nice bonus is that they are free.

Google Search Console shows webmasters how a project is presented on the SERPs and reports errors on the website and its pages. It is also the only place where you can see whether a website has been “punished” with a manual action.

Google Analytics helps a webmaster (and other specialists on the team) analyze visitors and their behavior on a website. These are the two major ones. However, you will definitely make frequent use of Keyword Planner, Google PageSpeed Insights, Google Mobile-Friendly Test Tool, and other tools we will talk about in this article.

You can use the Google Services interface of WebCEO to log in to all of them in one place (Google makes you log in to each service separately). Before newcomers decide to skip this part, I should mention a few conveniences:

- WebCEO pulls data from the various Google tools and lets you work in one interface instead of jumping from one service to another, because Google, unfortunately, doesn’t keep everything in one place;

- WebCEO processes the data to present users with unique reports that can significantly help during optimization;

- WebCEO cooperates with other platforms and uses their databases (such as backlink or keyword databases) to enrich reports and present in-depth data.

WebCEO will help you cope with each stage of analysis, optimization, and results monitoring. It’s vitally important to have a reliable helper when you perform a new task.

All you need to do is add the project you want to optimize, and after a short while you will get a lot of information on different aspects of website performance: keyword optimization, off- and on-page optimization, SEO and technical analysis, and a lot more.

Today we will focus on the technical side of website performance and, without further ado, let’s dive in!

STEP 2: Check if your project meets all the necessary technical requirements

A project’s technical condition is another factor that can depress your rankings and damage the user experience. One of the biggest challenges is identifying where your technical optimization went wrong.

You will have to check even the basics to make sure you (or another specialist) didn’t make any mistakes at the beginning.

- Crawling and Indexing

Go to your client’s Google Search Console account to check whether Google had problems crawling and indexing the website. The “Coverage” report will tell you if there are any and show the details. That may be where the root of all evil lies.

1. Go deeper into crawling and indexing.

Google performs two basic operations on any website: it scans your content (crawling) and adds your pages to its database (indexing). There are situations when you would like some pages to avoid crawling and indexing. You may have plenty of reasons for this: underdeveloped pages, shopping cart checkouts, advertising pages that compete with the content you already have on the website, and so on.

There are two ways to keep pages away from Google: a robots.txt file and a robots meta tag.

If you don’t want some pages to be crawled by Googlebot, create a robots.txt file listing the URLs you want to “hide” from Google and place it at the root of the site.

Remember that robots.txt protects your URLs from crawling but not from indexing. If someone links to one of the pages you have listed in robots.txt, Google will still see that URL. The link can even appear in the SERPs, but without metadata such as a description.

Read what Google says about robots.txt so you get it right.
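To see how crawlers read such a file, here is a minimal sketch using Python’s standard `urllib.robotparser` module. The robots.txt contents and the hello.com URLs are hypothetical. Keep in mind that the check only answers “may this URL be crawled?”; it says nothing about indexing. Python’s parser also uses simple prefix matching, so rules that rely on Google’s `*` and `$` wildcards may not be evaluated exactly the way Googlebot evaluates them.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt for hello.com: keep crawlers out of checkout and drafts
ROBOTS_TXT = """\
User-agent: *
Disallow: /checkout/
Disallow: /drafts/
"""


def crawl_rules(robots_txt):
    """Parse robots.txt text that you fetched or wrote locally."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


rules = crawl_rules(ROBOTS_TXT)
for url in ("https://www.hello.com/checkout/step-1", "https://www.hello.com/blog/"):
    verdict = "allowed" if rules.can_fetch("Googlebot", url) else "blocked"
    print(f"{url}: {verdict}")
```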

If you want to prevent your client’s website pages from being shown in the SERPs, work with a robots meta tag. To give Google the red light on indexing your content, add a noindex meta tag to the <head> of the page’s HTML code. The page will still be crawled as usual but won’t appear in search results.

A mistake can arise if you want to have it both ways: no crawling and no indexing. Things don’t work like that. Choose one of the two options, whichever suits you best; applying both robots.txt and noindex won’t work out. Googlebot sees the noindex meta tag only when it crawls the page, so if the page is blocked in robots.txt, your “noindex wish” is never read. As a result, Google won’t crawl the page but may still index it.
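This conflict can be caught automatically. The rough sketch below assumes you already have a page’s HTML and your robots.txt text as strings. It looks for a `noindex` directive in a robots (or googlebot) meta tag and flags pages where robots.txt would stop Googlebot from ever reading it. `check_page` and the sample data are hypothetical, not part of any SEO tool.

```python
from html.parser import HTMLParser
from urllib.robotparser import RobotFileParser


class RobotsMetaParser(HTMLParser):
    """Detect a robots or googlebot meta tag whose content includes "noindex"."""

    def __init__(self):
        super().__init__()
        self.noindex = False

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attrs = dict(attrs)
        name = (attrs.get("name") or "").lower()
        content = (attrs.get("content") or "").lower()
        if name in ("robots", "googlebot") and "noindex" in content:
            self.noindex = True


def check_page(url, html, robots_txt, agent="Googlebot"):
    """Combine the meta tag verdict and the robots.txt verdict for one URL."""
    meta = RobotsMetaParser()
    meta.feed(html)
    meta.close()

    robots = RobotFileParser()
    robots.parse(robots_txt.splitlines())
    crawlable = robots.can_fetch(agent, url)

    if meta.noindex and not crawlable:
        return "conflict: robots.txt blocks crawling, so the noindex tag is never read"
    if meta.noindex:
        return "ok: crawled but kept out of search results"
    if not crawlable:
        return "blocked from crawling, but the URL can still be indexed via links"
    return "crawlable and indexable"


robots_txt = "User-agent: *\nDisallow: /drafts/\n"
noindex_html = '<head><meta name="robots" content="noindex"></head>'
print(check_page("https://www.hello.com/drafts/new-post", noindex_html, robots_txt))
print(check_page("https://www.hello.com/promo", noindex_html, robots_txt))
```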

Another blooper happens when you want to keep non-HTML files such as images, videos, or PDFs out of the index. A regular robots meta tag won’t help, because these files have no HTML <head> to put it in. Instead, use the X-Robots-Tag HTTP header, set in the server’s response for those files. This will prevent them from being indexed.
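On most sites this header is configured in the web server itself (Apache, nginx, and so on). As an illustration only, assuming a Python application served through WSGI, a small middleware could attach `X-Robots-Tag: noindex` to responses for non-HTML files. The list of file extensions is an assumption; adjust it to the files you actually want kept out of the index.

```python
# Assumed list of non-HTML file types to keep out of the index
NON_HTML_SUFFIXES = (".pdf", ".jpg", ".jpeg", ".png", ".gif", ".mp4")


def x_robots_noindex(app):
    """WSGI middleware: add "X-Robots-Tag: noindex" to responses for non-HTML files."""

    def wrapped(environ, start_response):
        path = environ.get("PATH_INFO", "").lower()

        def start_with_header(status, headers, exc_info=None):
            if path.endswith(NON_HTML_SUFFIXES):
                headers = list(headers) + [("X-Robots-Tag", "noindex")]
            return start_response(status, headers, exc_info)

        return app(environ, start_with_header)

    return wrapped


def demo_app(environ, start_response):
    """Stand-in application that serves every path as a PDF."""
    start_response("200 OK", [("Content-Type", "application/pdf")])
    return [b"%PDF-1.4 ..."]


app = x_robots_noindex(demo_app)
```

To try it locally, you could serve the wrapped `app` with `wsgiref.simple_server.make_server` and inspect the response headers of a PDF URL.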

Look carefully at what Google recommends for the robots meta tag and the X-Robots-Tag header, and apply them correctly.

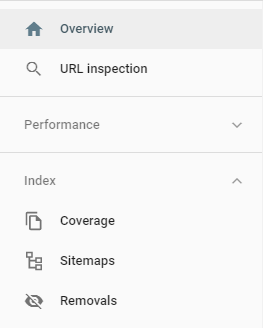

2. Check your code and index status in Google Search Console

Sections we will need: the URL Inspection Tool and the Coverage report.

With the help of the URL Inspection Tool, you can get information on the indexed and live versions of a page and see why its condition doesn’t meet your expectations. The tool shows information on indexing, discovery and crawling, structured data, mobile usability, and AMP.

If the URL Inspection Tool shows that you have some issues, start fixing them.

Before asking Google to reindex the page, check the results of your work with the Test live URL option. It confirms that everything works and the page is ready to be reindexed after your changes. Click it to get fresh data about the page. If there are still some issues, fix them too and test again.

If you have some time, I highly recommend you watch this Google Search Console video and check out the whole series to understand it better.

To see a full list of existing issues, turn to the Coverage report. It shows the same information as the URL Inspection Tool, but for all pages at once. That’s convenient when you want to see the general wellbeing (or not) of a project.

3. Make sure your Manual Actions report is clean

A manual action is issued against a website when it violates the Google Webmaster Guidelines. Google regularly updates its algorithm and adds new “behavior rules” for different types of websites. You have to follow them to keep your rankings high.

If you have received a manual action, check whether it came after an algorithm update rollout and assess your compliance with the new rules. Fix the issues and request a review to confirm you have resolved everything.

4. Check whether your HTML code helps direct international traffic

You would like your potential clients to understand what a website offers even if they don’t speak English, especially if your business operates on the international market.

Important! If you haven’t thought about expanding your business abroad, check whether you already need to. Analyze your existing customers in your private database and Google Analytics: what country they are from, what language they speak, and how they use your products or services in their home environment.

If you already have a website translated into different languages, this part is for you.

I’ll bet you would like the right pages to be shown in front of the right audience, right? If you aren’t sure if this condition is met, it’s high time to get down to business.

All of this is done in the website’s HTML code.

- Tell search engines what pages to show in specific locations.

An hreflang tag tells search engines which variant of a page (which language) to show to users in specific locations. This applies even to pricing pages in different currencies. For instance, if you want to show a German version of a page to people searching from Germany without making them choose a language on their own, you can express this “wish” in code.

Like this:

<link rel="alternate" hreflang="es" href="http://www.es.hello.com/" />

<link rel="alternate" hreflang="de" href="http://www.de.hello.com/" />

An hreflang tag can also be used to target people whose browsers are set to a specific language in regions where that language isn’t the local one. For example, if you want an English version of a page to be shown to English-speaking users in Israel, simply add a location to the code:

<link rel="alternate" hreflang="en-il" href="http://www.en-il.example.com/" />

The language code comes first, in ISO 639-1 format, followed by an optional region code in ISO 3166-1 Alpha 2 format.
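A quick format check catches many hreflang typos before Google does. The sketch below is a simplified check written for this article: it accepts a two-letter language code, optionally followed by a two-letter region code, plus the special `x-default` value. It validates the format only, so a value like `en-uk` passes even though the ISO 3166-1 code for the United Kingdom is `gb`; script subtags (for example, `zh-Hant`) are not handled either.

```python
import re

# Simplified format: ISO 639-1 language (2 letters), optionally followed by "-"
# and an ISO 3166-1 alpha-2 region (2 letters). Script subtags are not handled.
HREFLANG = re.compile(r"[a-z]{2}(?:-[a-z]{2})?", re.IGNORECASE)


def is_valid_hreflang(value):
    """Return True if the value has a plausible hreflang format or is x-default."""
    return value.lower() == "x-default" or HREFLANG.fullmatch(value) is not None


for code in ("es", "de", "en-il", "en-GB", "en_US", "eng", "x-default"):
    print(code, is_valid_hreflang(code))
```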

Google’s documentation has all the necessary information about hreflang tags.

- Indicate what page runs the show.

If you have hreflang tags for other language versions of your pages, don’t forget to add a self-referencing hreflang tag as well: each version should list itself along with all of its alternates. It resembles a canonical tag, which shows Googlebot which page among several is the main one that should be indexed and shown in search results.

For example, <link rel="alternate" hreflang="en" href="https://www.hello.com/" />

A self-referencing hreflang tag works a little differently from a canonical tag: it identifies the page’s own language version but does not prevent Google from indexing the other versions. Such a tag is good practice, and you should definitely include it in your page code.

Google Search Console’s International Targeting report shows the languages and countries you are targeting.

Multilanguage websites have other aspects to consider:

If you decide to go for a multi-language interface, you should think about local domains for each of the languages you are going to translate your content into.

Create a new website under a new local-focused domain, then add a redirect to it that is triggered as soon as a user clicks the corresponding language icon. Your main domain will be hello.com, and the local-focused ones will be hello.uk, hello.au, or hello.de.

- Point out what page to show if all variants are wrong.

If you have multiple versions of the website for different countries or languages, you should use hreflang tags. But what should you do if none of your languages matches a user’s browser settings? Google has a solution for such situations: the x-default value.

The x-default value should point to a fallback page, typically the homepage, where users can choose a language on their own:

<link rel="alternate" href="http://www.hello.com/" hreflang="x-default" />
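Because every language version should list itself and all of its alternates, it can be easier to generate one shared set of tags than to write them by hand. This is a minimal sketch; the `VARIANTS` mapping and the choice of the English homepage as the `x-default` fallback are hypothetical.

```python
from html import escape

# Hypothetical language versions of one page on hello.com
VARIANTS = {
    "en": "https://www.hello.com/",
    "de": "https://www.de.hello.com/",
    "es": "https://www.es.hello.com/",
}
X_DEFAULT = "https://www.hello.com/"  # assumed fallback page with a language selector


def hreflang_block(variants, x_default):
    """Build the full set of hreflang <link> tags shared by every language version."""
    tags = [
        f'<link rel="alternate" hreflang="{escape(lang)}" href="{escape(url)}" />'
        for lang, url in sorted(variants.items())
    ]
    tags.append(f'<link rel="alternate" hreflang="x-default" href="{escape(x_default)}" />')
    return "\n".join(tags)


print(hreflang_block(VARIANTS, X_DEFAULT))
```

Placing the same output in the <head> of every version gives each page its self-referencing tag, the return links to the other versions, and the x-default fallback.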

- Check if your traffic is really directed to the original pages instead of duplicates

A canonical tag will help. It is used to indicate a “chief” version of one of your pages. You may ask: are there any non-chief versions of the page? The answer is yes. For example,

https://www.yoursite.com/

https://yoursite.com/

are treated as different pages by search engines. The same goes for the HTTP and HTTPS versions of a page.

You may not notice it, but the same happens when a blog post is filed under several categories. Assigning one article to several categories can create a separate URL for each of them. For example:

https://yoursite.com/blog/one-category/what-is-a-canonical-tag

https://yoursite.com/blog/another-category/what-is-a-canonical-tag

Search engines may treat these as two different pages.

A canonical tag tells search engines which URL is the true one, so that traffic and signals go to that page instead of its duplicates. When a user types a query, the search engine considers all the variations of the page’s URL, and if there is no canonical tag in the code, it will choose one by itself.
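Many duplicates differ only in protocol, the `www.` prefix, or a trailing slash, so a crawl export can be grouped by a normalized key to reveal them. This sketch assumes your preferred form is HTTPS without `www.`; swap in whatever version you actually choose as canonical. Category-based duplicates like the two blog URLs above have different paths, so normalization won’t merge them; those still need a canonical tag.

```python
from collections import defaultdict
from urllib.parse import urlsplit, urlunsplit


def normalize(url):
    """Map protocol, www, and trailing-slash variants to one key (assumes HTTPS, no www)."""
    parts = urlsplit(url)
    host = parts.netloc.lower().removeprefix("www.")
    path = parts.path.rstrip("/") or "/"
    return urlunsplit(("https", host, path, parts.query, ""))


def group_duplicates(urls):
    """Return only the normalized keys that several crawled URLs collapse into."""
    groups = defaultdict(list)
    for url in urls:
        groups[normalize(url)].append(url)
    return {key: variants for key, variants in groups.items() if len(variants) > 1}


crawled = [
    "https://www.yoursite.com/",
    "https://yoursite.com/",
    "http://yoursite.com/",
    "https://yoursite.com/blog/one-category/what-is-a-canonical-tag",
    "https://yoursite.com/blog/another-category/what-is-a-canonical-tag",
]
print(group_duplicates(crawled))
```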

According to John Mueller, Google considers two things when choosing one URL among several similar ones: the site’s preference and the user’s preference.

How do you signal your preferences?

- set a canonical tag for the page you want to be shown for relevant queries;

- set a 301 redirect where it is needed;

- interlink pages within the website properly: when you link to a page that has duplicates for whatever reason, use the URL that is in your sitemap.

Note that Google prefers HTTPS URLs with a clean structure.

Set a canonical tag in the <head> of the HTML code of each duplicate (and of the chief page itself), pointing to the URL you want to be the chief one.

For example,

<link rel="canonical" href="https://www.hello.com/" />
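To audit existing pages, you can parse the HTML and check where canonical tags appear. The sketch below, written for this article rather than taken from any tool, reports a missing canonical tag, several conflicting ones, relative URLs (absolute URLs are the safer choice), and canonical links placed outside the `<head>`.

```python
from html.parser import HTMLParser
from urllib.parse import urlsplit


class CanonicalParser(HTMLParser):
    """Collect rel="canonical" links and note whether each one sits inside <head>."""

    def __init__(self):
        super().__init__()
        self.in_head = False
        self.found = []  # (href, inside_head) pairs

    def handle_starttag(self, tag, attrs):
        if tag == "head":
            self.in_head = True
        elif tag == "link":
            attrs = dict(attrs)
            if "canonical" in (attrs.get("rel") or "").lower().split():
                self.found.append((attrs.get("href") or "", self.in_head))

    def handle_endtag(self, tag):
        if tag == "head":
            self.in_head = False


def canonical_issues(html):
    """Return a list of human-readable problems with a page's canonical tags."""
    parser = CanonicalParser()
    parser.feed(html)
    parser.close()
    issues = []
    if not parser.found:
        issues.append("no canonical tag: Google will choose a canonical URL itself")
    elif len(parser.found) > 1:
        issues.append("several canonical tags send conflicting signals")
    for href, inside_head in parser.found:
        if not urlsplit(href).scheme:
            issues.append(f"relative canonical URL: {href!r}")
        if not inside_head:
            issues.append(f"canonical tag outside <head>: {href!r}")
    return issues


print(canonical_issues('<html><head><link rel="canonical" href="https://www.hello.com/" /></head></html>'))
```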

To handle duplicates among non-HTML documents, such as PDF files, send a rel="canonical" Link HTTP header that identifies the URL you want indexed.

N.B.: Don’t combine a canonical tag and an hreflang tag in the same <link> element. Add them as separate elements in the page’s HTML code.

5. See if the website is fast enough for your potential users

Page speed is one of the most important technical aspects to deal with. According to Google, page speed influences your rankings. Once a page takes more than a second to load, the probability of a visitor leaving grows with every additional half second. This leads to other consequences, such as a high bounce rate that Google doesn’t like.

Analyze your page speed in Google PageSpeed Insights to see whether performance is keeping the website out of the top search results. Go through the list of factors that affect your page speed score and optimize your content accordingly.

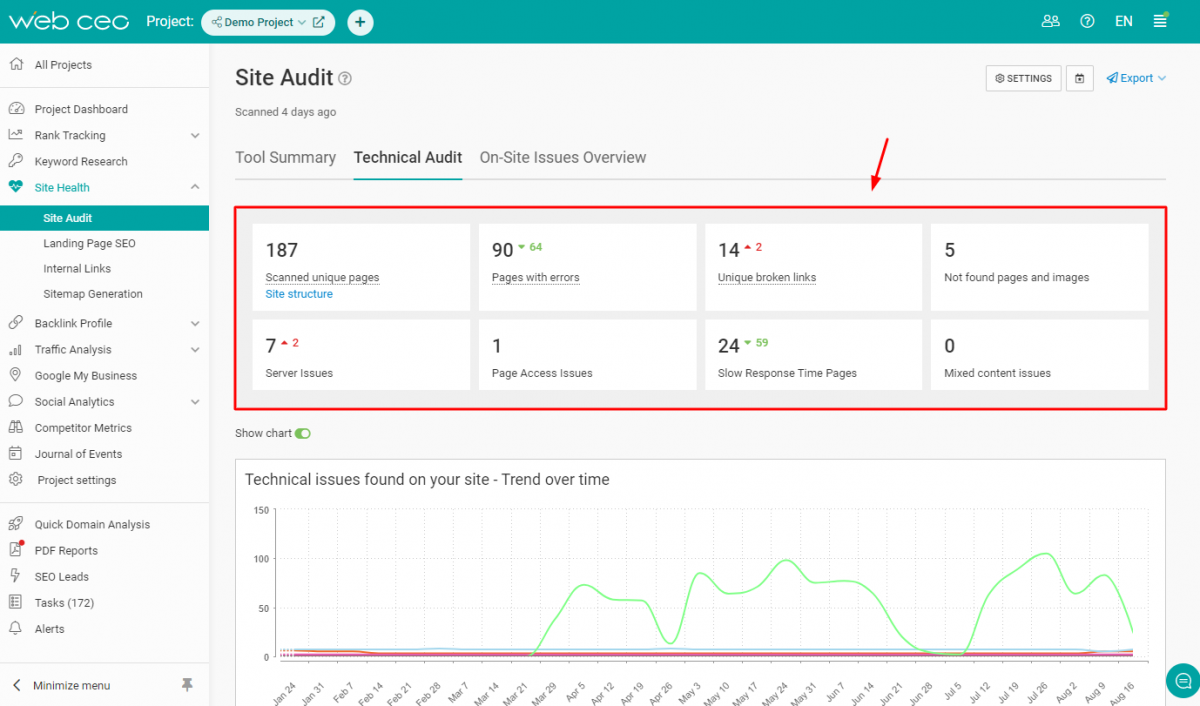

Use the WebCEO Site Audit Tool to get this data with valuable advice on how to fix issues if the system identifies them.

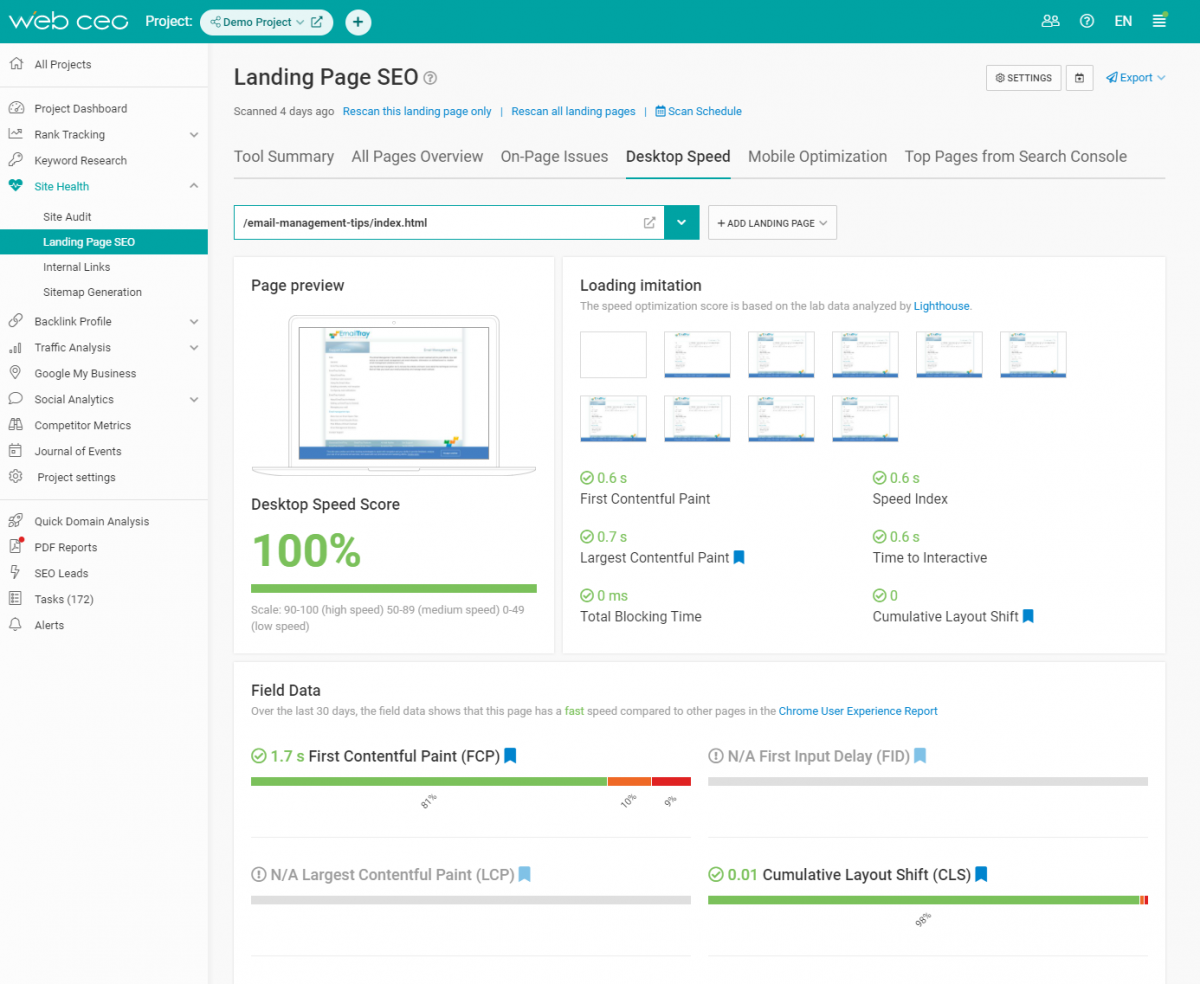

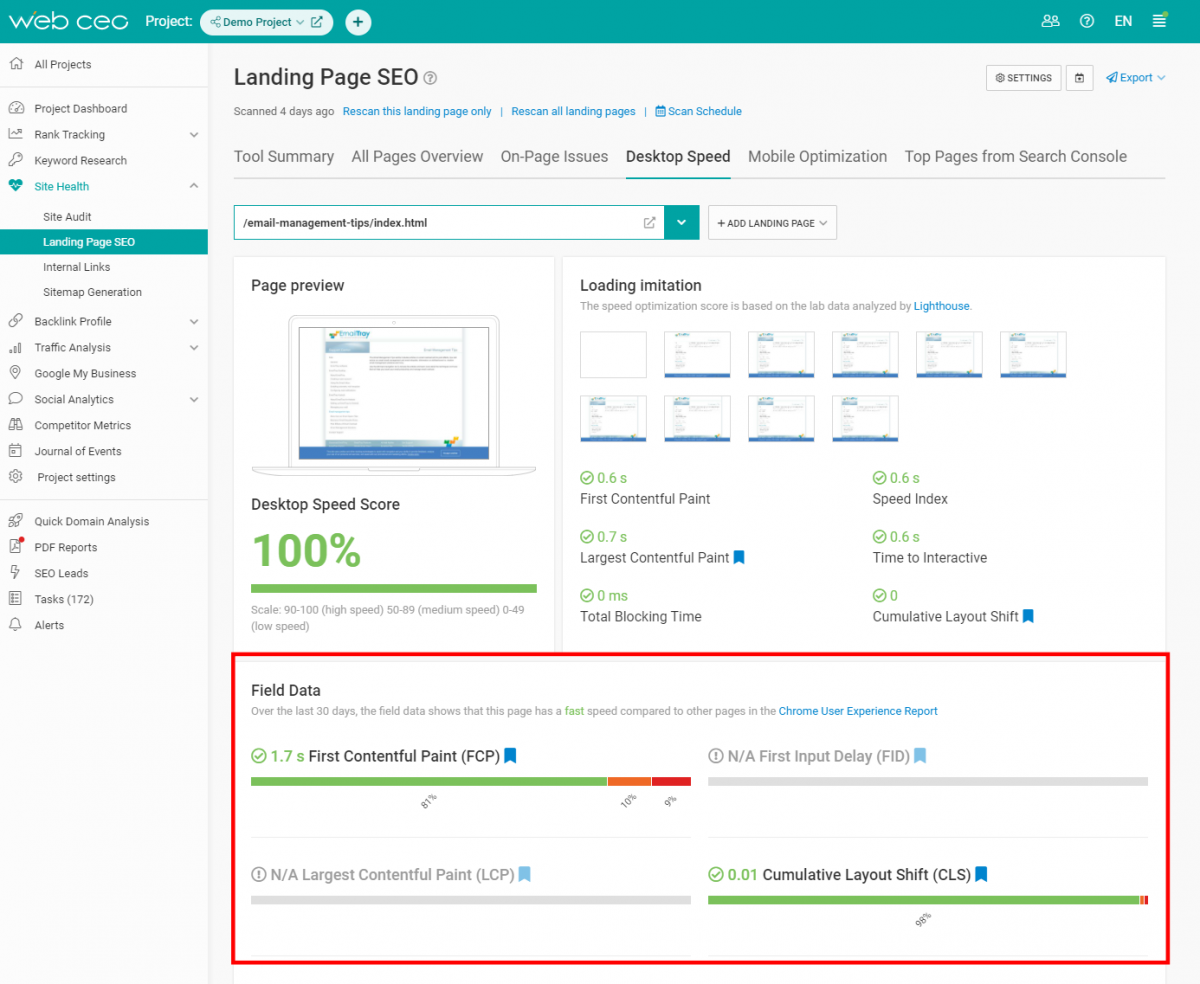

6. Get Acquainted with a New Ranking Signal: Core Web Vitals

Core Web Vitals have caused a lot of noise recently. These metrics evaluate the quality of the user experience on a website. Google analyzes your projects by three factors:

- Largest Contentful Paint: how long it takes to render the largest image or text block visible in the viewport;

- First Input Delay: how long a user waits, after first interacting with the page (a click, a tap, or a key press), before the browser starts responding;

- Cumulative Layout Shift: measures unexpected shifts of page elements that damage the user experience.
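Google publishes “good” and “poor” boundaries for each metric and evaluates them at the 75th percentile of page loads. A tiny helper can label your own field data with those boundaries; the measurement values below are hypothetical.

```python
# Google's published boundaries per metric: (max for "good", max for "needs improvement")
THRESHOLDS = {
    "LCP": (2.5, 4.0),   # seconds
    "FID": (100, 300),   # milliseconds
    "CLS": (0.1, 0.25),  # unitless layout-shift score
}


def rate(metric, value):
    """Label a 75th-percentile measurement as good, needs improvement, or poor."""
    good_max, ok_max = THRESHOLDS[metric]
    if value <= good_max:
        return "good"
    if value <= ok_max:
        return "needs improvement"
    return "poor"


field_data = {"LCP": 3.1, "FID": 80, "CLS": 0.3}  # hypothetical measurements
for metric, value in field_data.items():
    print(f"{metric}: {value} -> {rate(metric, value)}")
```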

WebCEO also joined the discussion and published an article on Core Web Vitals with advice on how to keep your website in line with the new rules. Our platform can also tell you whether everything is okay with the website or whether you should consider a revision.

7. Get Rid of Broken Links and Pages

Internal links are a reliable guide through the content on a website. If navigation comes to a forced stop, a user will leave and may not return. The same goes for broken pages.

Discover broken links and pages with the WebCEO Site Audit Tool and get proven and helpful advice on how to solve this problem.

Fix your issues and let your readers be happy with the content you create.
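If you export crawl results, finding broken internal links comes down to comparing every link target with the status code it returned. This sketch assumes two hypothetical inputs: a map of crawled URLs to status codes and a map of each page’s outgoing internal links. Targets that were never crawled are reported too, since the crawler may have missed them.

```python
# Hypothetical crawl results: status code returned by each internal URL
STATUS = {
    "/": 200,
    "/blog/": 200,
    "/blog/old-post": 404,
    "/pricing": 200,
    "/docs": 500,
}
# Hypothetical internal links found on each page
LINKS = {
    "/": ["/blog/", "/pricing"],
    "/blog/": ["/blog/old-post", "/docs"],
    "/pricing": ["/", "/contact"],
}


def broken_links(links, status):
    """List (source page, target URL, status) for targets that failed or were never crawled."""
    found = []
    for source, targets in links.items():
        for target in targets:
            code = status.get(target)
            if code is None or code >= 400:
                found.append((source, target, code))
    return found


for source, target, code in broken_links(LINKS, STATUS):
    print(f"{source} links to {target} ({code if code is not None else 'not crawled'})")
```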

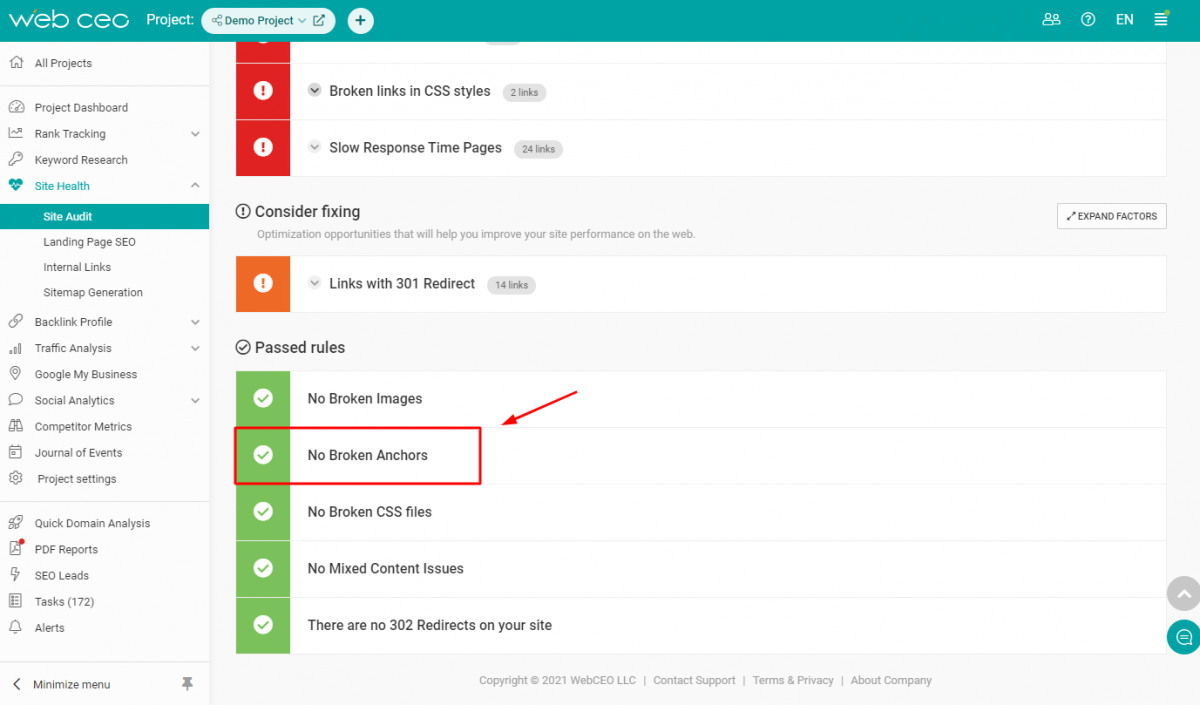

8. Add descriptive anchor text to links that are missing it

Anchor texts are an important signal that helps crawlers understand the content of the page you are linking to. They also tell users what they will find on that page.

Check and optimize your anchors (in case something went wrong) with the WebCEO Site Audit Tool. You will see which pages and links lack anchor texts and get advice on how to optimize them.
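For a quick check outside a dedicated tool, Python’s built-in `html.parser` can list links that have no visible anchor text. This is a simplified sketch: it treats an image’s `alt` text inside a link as its anchor text and ignores links hidden by CSS or generated by JavaScript.

```python
from html.parser import HTMLParser


class AnchorAudit(HTMLParser):
    """Collect (href, anchor text) pairs; an image's alt text counts as anchor text."""

    def __init__(self):
        super().__init__()
        self.links = []
        self._current = None  # [href, text] of the <a> element being read

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "a":
            self._current = [attrs.get("href") or "", ""]
        elif tag == "img" and self._current is not None:
            self._current[1] += attrs.get("alt") or ""

    def handle_data(self, data):
        if self._current is not None:
            self._current[1] += data

    def handle_endtag(self, tag):
        if tag == "a" and self._current is not None:
            self.links.append((self._current[0], self._current[1].strip()))
            self._current = None


def links_missing_anchor_text(html):
    """Return the hrefs of links whose anchor text is empty."""
    audit = AnchorAudit()
    audit.feed(html)
    audit.close()
    return [href for href, text in audit.links if not text]


page = ('<a href="/pricing">See pricing</a> <a href="/blog/"></a> '
        '<a href="/team"><img src="team.png"></a>')
print(links_missing_anchor_text(page))  # ['/blog/', '/team']
```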

9. Improve the User Experience

User experience is one of the most important ranking factors for Google. The way people feel on the website’s pages greatly affects your position in the SERPs. There are some critical aspects to consider before you can say that a website is really user-friendly:

- Design

We live in an era of visuals. If you don’t follow the trend you will most likely be neglected. People like it when a picture in front of their eyes is pleasing and modern, especially when everybody does their best to impress a visitor. Don’t mess up where your competitors succeed.

A good design even makes the whole product look more reliable.

Don’t forget that your visual concept should comply with your niche. Don’t have it so bright it hurts people’s eyes. Instead, make it comfortable enough to nurture a desire to get more useful information about your business.

- Ads and Pop-ups

Ads on a website can be extremely disturbing, especially pop-ups that appear on the page when no one needs or expects them. It’s particularly uncomfortable when users come with a strong appetite for your content but have to fight their way through advertising.

If you want ⅔ of your page covered with advertisements, your website has to be authoritative enough that people never think of leaving it. Otherwise, faced with dozens of blinking ads, visitors will leave to find a more comfortable environment.

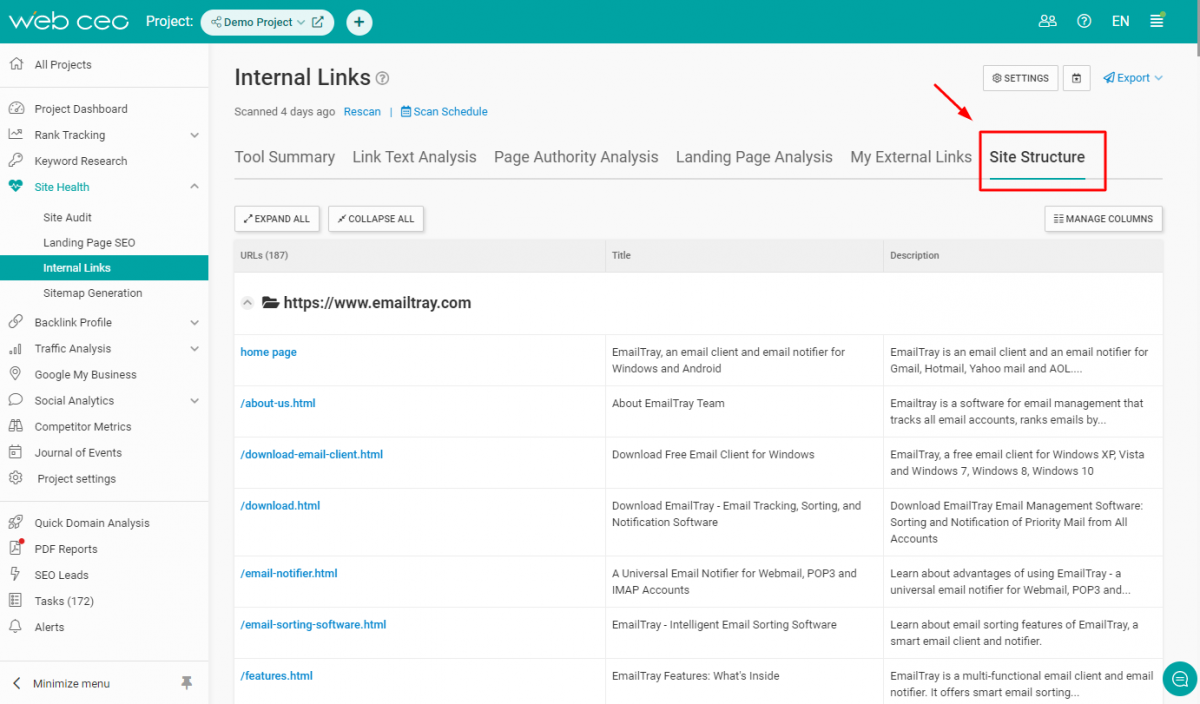

- Structure and navigation

Think carefully about the website’s “tree” and rehearse the way a user would climb each of its “branches”. Structure and navigation are two important components for people to travel through your website. If they can’t intuitively understand where the content they seek is located, you are in trouble.

There are few Internet experiences worse than getting lost in thousands of pages. This will hurt your project as well: people will visit lots of pages and leave them as quickly as possible. Not a great scenario for your bounce rate, right?

Create a clear and simple website layout with easy-to-notice navigation and easy-to-understand titles for each section. You can even draw it with a pen on a piece of paper. Ask someone to critique your efforts. If there are any problematic moments, fix them and present a new version. It’s easy and won’t take much of your time. Check the site structure with the WebCEO Internal Links Tool to get a comprehensive map of the website.
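One way to test the “tree” is to measure click depth: the minimum number of clicks from the homepage to each page, found with a breadth-first search over internal links. The link map and the three-click threshold below are assumptions for illustration, not a Google rule.

```python
from collections import deque

# Hypothetical internal-link map: page -> pages it links to
SITE = {
    "/": ["/shop/", "/blog/"],
    "/shop/": ["/shop/shoes/"],
    "/shop/shoes/": ["/shop/shoes/running/"],
    "/shop/shoes/running/": ["/shop/shoes/running/model-x"],
    "/blog/": [],
}
MAX_CLICKS = 3  # assumed rule of thumb, not an official limit


def click_depth(site, home="/"):
    """Breadth-first search: fewest clicks needed to reach each page from home."""
    depth = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in site.get(page, []):
            if target not in depth:
                depth[target] = depth[page] + 1
                queue.append(target)
    return depth


too_deep = {page: d for page, d in click_depth(SITE).items() if d > MAX_CLICKS}
print(too_deep)  # {'/shop/shoes/running/model-x': 4}
```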

Breadcrumbs should be on every website! This is especially true if you run a website with thousands of categories and subcategories, such as an e-commerce website. People like to understand where they are, how they got there, and how to go back a step or two without clicking the “back” button.

- CTAs

It’s common to see CTAs that lead to irrelevant, spammy, or advertising pages. Believe me, neither Google nor users like it.

A little note: CTAs are hardly a new way to invite users to specific pages, and their flashy designs sometimes trigger irritation instead of the desired click.

Give some relief to your users’ eyes and work on a cool presentation of your CTA, be it a text link, a button, or a banner. Prepare catchy and interesting titles and descriptions of what a user will get if he or she clicks on your CTA. This will work out better than just bright colors which can hurt somebody’s eyes.

Remember that a CTA is only the final push for a user. Before it can work, you should warm him or her up with your content! So first work on “warm-up” texts, and then put your soul into appropriate CTAs.

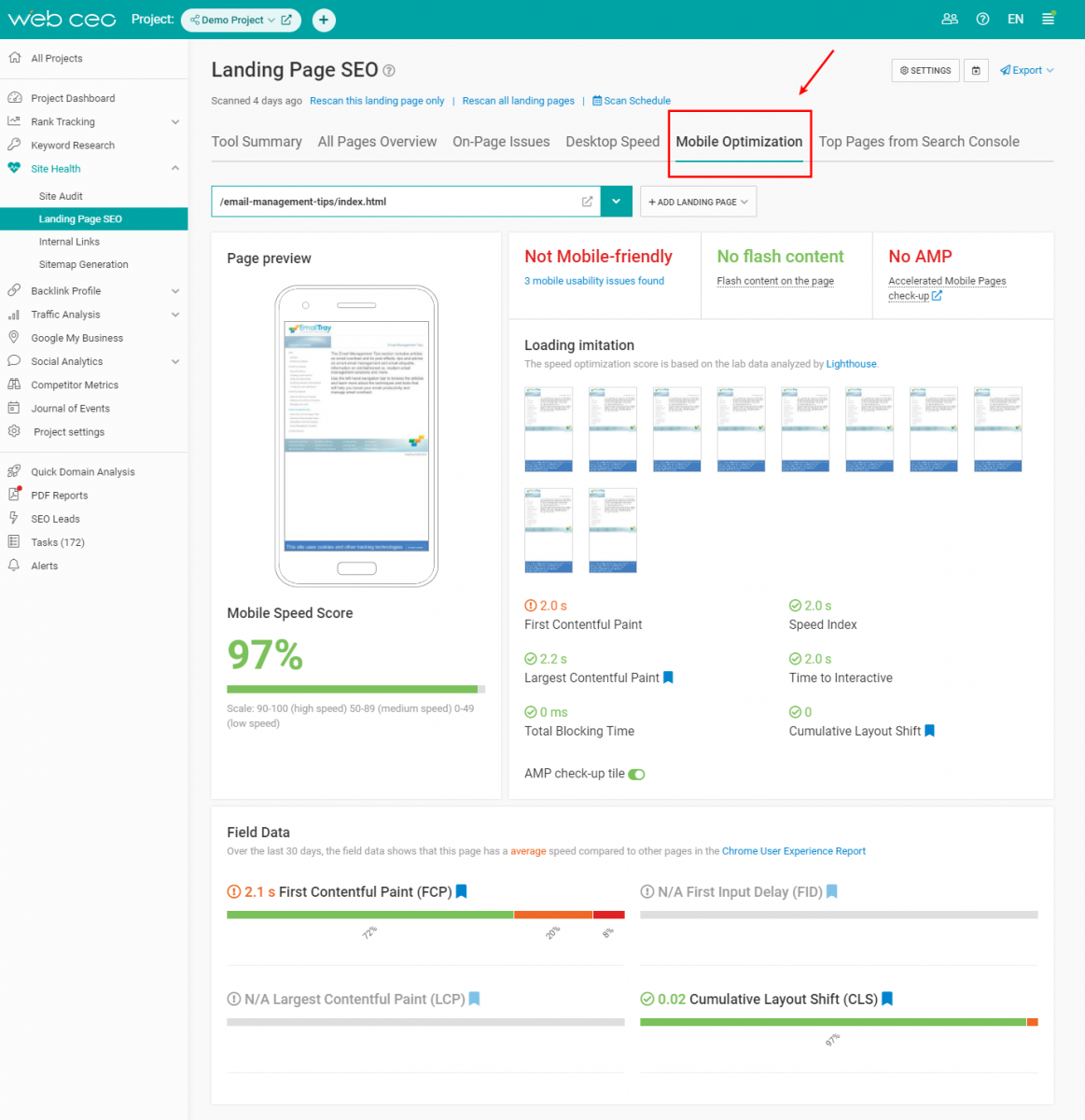

- Mobile Friendliness

We live in a mobile era. It’s neither a secret nor a discovery. If a website isn’t ready to be presented on smartphone and tablet screens, you will lose the SEO game. Google has explicitly stated that the mobile version of a website takes priority during indexing, and since July 2019 mobile-first indexing has been the default for new websites.

Make sure your websites are responsive if they aren’t already.

Since many people prefer to browse websites on their smartphones, you have to make your content comfortable to read and work with on small screens, so that people won’t throw their gadgets against the wall! This concerns everything: texts, images, the navigation bar, forms, buttons, search, filters, sorting, and many more mobile details.
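Responsive layouts usually start with a viewport meta tag such as `<meta name="viewport" content="width=device-width, initial-scale=1">`. This sketch only checks that the tag is present and uses the device width; it cannot tell whether the rest of the layout actually adapts to small screens.

```python
from html.parser import HTMLParser


class ViewportCheck(HTMLParser):
    """Remember the content of the page's viewport meta tag, if any."""

    def __init__(self):
        super().__init__()
        self.viewport = None

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "meta" and (attrs.get("name") or "").lower() == "viewport":
            self.viewport = attrs.get("content") or ""


def has_responsive_viewport(html):
    """True if a viewport meta tag sets width=device-width."""
    checker = ViewportCheck()
    checker.feed(html)
    checker.close()
    if checker.viewport is None:
        return False
    return "width=device-width" in checker.viewport.replace(" ", "").lower()


print(has_responsive_viewport(
    '<head><meta name="viewport" content="width=device-width, initial-scale=1"></head>'
))
```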

Via the WebCEO Mobile Friendliness Tool, you can evaluate whether your websites are suitable for the mobile world.

- Security

Security certificates have been essential for a long time. If the website you are working on shows a “Not Secure” warning in the address bar, chances are high you will lose some new readers, because everybody is concerned about their personal data.

Readers’ trust is one of the most important factors in the overall success of any business. If they are sure you will keep their personal data under lock and key, they will visit your website without hesitation.

Google favors secure websites over insecure ones, and website security has long been a ranking factor. If your goal is to climb higher in the SERPs, use only trustworthy hosting providers that will maintain an SSL certificate for your website.
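An expired certificate brings the “Not Secure” warning right back, so it is worth tracking expiry dates. The sketch below uses Python’s standard `ssl` and `socket` modules: `fetch_not_after` reads the live certificate’s `notAfter` field (it needs network access), and `days_until_expiry` turns that date into the number of days left. Both function names are hypothetical helpers, not part of any SEO tool.

```python
import socket
import ssl
import time
from typing import Optional


def days_until_expiry(not_after: str, now: Optional[float] = None) -> float:
    """Days left before a certificate's notAfter date (the format getpeercert() returns)."""
    expires = ssl.cert_time_to_seconds(not_after)
    current = time.time() if now is None else now
    return (expires - current) / 86400


def fetch_not_after(host: str, port: int = 443) -> str:
    """Open a verified TLS connection and read the server certificate's notAfter field."""
    context = ssl.create_default_context()
    with socket.create_connection((host, port), timeout=10) as sock:
        with context.wrap_socket(sock, server_hostname=host) as tls:
            return tls.getpeercert()["notAfter"]
```

For example, `days_until_expiry(fetch_not_after("www.hello.com"))` would report the days left for a hypothetical hello.com site; an alert at, say, 30 days gives your hosting provider time to renew.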

Security matters not only for visitors but also for webmasters. Everyone who works on the website, in whatever role, has to keep things under control:

– Be careful with access and rights: give the power to implement big changes only to a limited number of people you can trust not to steal or misuse any inside information.

– Change passwords and update plugins and other software you use (including desktop antivirus programs) promptly. Long-lived passwords are a bad idea: eventually, they will be “found” and you will suffer. Make sure you protect your projects on all sides.

– Have backups of your projects. If it happens (we hope not) that one of the projects you are working on is attacked and all the data is erased, you will restore everything much faster with ready copies. You must admit that this is better than creating everything from scratch.

- Redirects

When we were discussing the canonical tag, a 301 redirect was mentioned in passing. Let’s discuss this topic in more detail.

For SEO purposes, there are two main types of redirects: 301 (a permanent redirect) and 302 (a temporary redirect).

As you have probably guessed, a 301 redirect is meant to last forever. It transfers the authority an old URL has gained to the new URL (without any loss of PageRank) and sends readers to the new URL until the end of time.

In general, there are four situations when a 301 redirect can be used:

- to move a website to a new domain with no sweat;

- to denote one page as the main source of traffic when there are several versions of it (exactly the canonical tag situation);

- to change the final destination of a link;

- to merge two websites.

What to remember when you work with 301 redirects:

– remove redirected pages from the sitemap and keep only the destination pages: a 301 redirect means you want to retire the old page for users and Google, so there is no need to include it in the sitemap; over time, replace as many redirects as possible with direct links to the destination;

– check for multiple redirects and fix them: a chain of redirects for one page slows things down, so point each redirect straight to the final destination;

– avoid “step back” redirection: don’t redirect a page back to a previous one and create a chain or loop of redirects; if the situation changes and you have to bring the old page back, remove needless redirects carefully so as not to confuse Google;

– always monitor the condition of your website and how your redirects work, and fix broken ones as soon as possible, because they significantly hurt the user experience;

– monitor the pages you have set the 301 redirect from and check whether they still receive any traffic; if they do, go to Google Search Console and request a recrawl;

– be careful with low-quality redirects: when a website’s domain expires, it may be taken over by a malicious website that uses it for profit. All backlinks that once led to a relevant website may then point to a toxic one, which can cause a lot of unpleasant outcomes.
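Chains and loops are easy to spot once you have a list of your redirects. Assuming a hypothetical map of old URL to new URL (for example, exported from your server configuration), this sketch follows each redirect to its end, flags loops, and computes a flattened map that points every URL straight to its final destination.

```python
def follow_redirects(start, redirects):
    """Follow a redirect map from `start`; return (chain of URLs, True if it loops)."""
    chain = [start]
    seen = {start}
    while chain[-1] in redirects:
        nxt = redirects[chain[-1]]
        chain.append(nxt)
        if nxt in seen:
            return chain, True
        seen.add(nxt)
    return chain, False


def flatten(redirects):
    """Point every non-looping redirect straight at its final destination."""
    flat = {}
    for url in redirects:
        chain, loops = follow_redirects(url, redirects)
        if not loops:
            flat[url] = chain[-1]
    return flat


# Hypothetical 301 map: old URL -> new URL
REDIRECTS = {
    "/old-pricing": "/pricing-2020",
    "/pricing-2020": "/pricing",
    "/a": "/b",
    "/b": "/a",
}

for url in REDIRECTS:
    chain, loops = follow_redirects(url, REDIRECTS)
    if loops:
        print("loop:", " -> ".join(chain))
    elif len(chain) > 2:
        print("chain:", " -> ".join(chain))
print(flatten(REDIRECTS))
```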

With the 302 redirect we have another story to tell.

If you want to hide a page from readers for a while and send them to another one instead, the 302 redirect is the right choice. Remember that it is meant only for a limited time. Its main drawback is that, unlike a 301 redirect, it transfers no juice from the hidden URL to the new one.

With a 302 redirect, you only change the destination for traffic. Use it when you need to run A/B tests, launch a special offer, or do other similar things.

Webmasters sometimes set temporary redirects where permanent ones are needed, so make sure each 302 redirect ends within a short period of time and Google can determine where the link should really point.

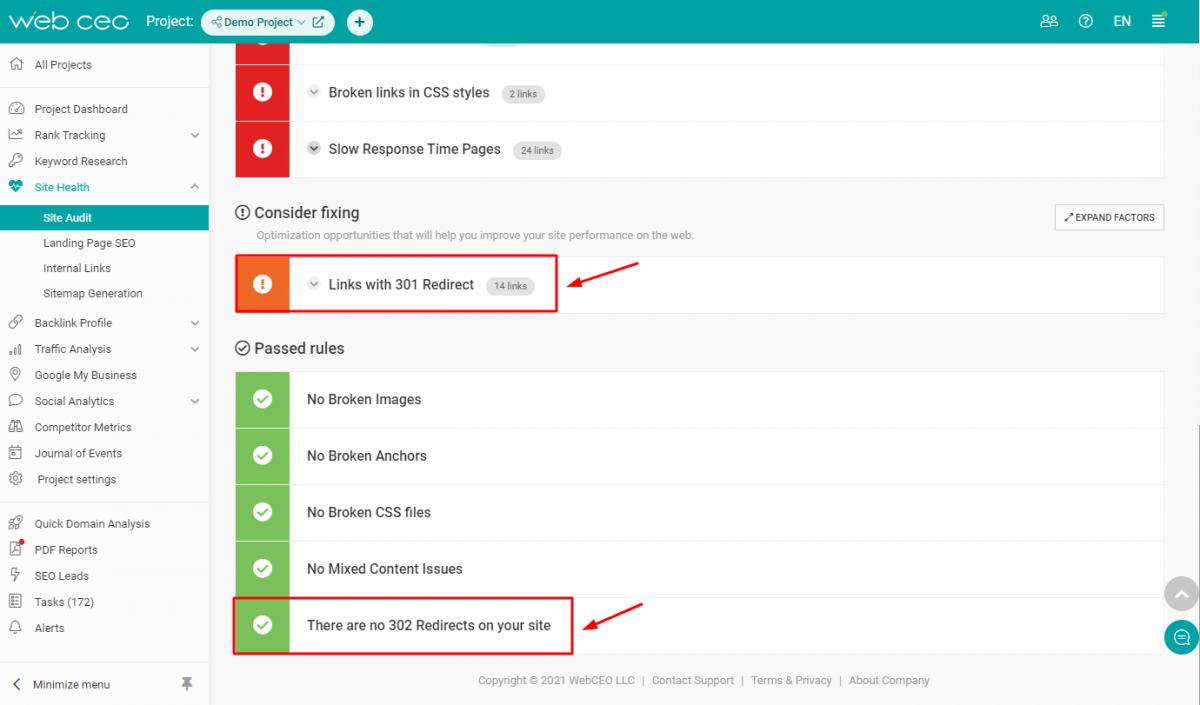

Use the WebCEO Site Audit Tool to check your website for redirect issues.

- Internal links

Internal links are also one of the more important factors from a technical and an on-page SEO point of view.

Internal links are an irreplaceable discovery tool for Google. Like backlinks, they help Google find and index your pages. At the same time, they give users a way to travel across the website with no stops and learn more about your content or products.

There are a few things to keep in mind when you work with internal links:

- Make all of your internal links dofollow: they should pass juice from one page to another to help your pages gain strong positions in search results;

- Be careful with the nofollow attribute and double-check whether it’s needed: it doesn’t pass any juice to the page you are linking to, so try not to deprive your own website’s pages of strength. A lot of authoritative websites put nofollow attributes only on external links;

- Prevent orphan pages from appearing: nofollow links can lead to orphan pages because they create obstacles for crawling;

- Make your internal links work: this concerns not just technical SEO but also better navigation for your audience. Anchor texts also play a significant role: with descriptive anchor texts, Google and people understand what the linked page is about.
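To spot pages that are starved of juice, you can count how many followed internal links point at each page. In this sketch the link list and its `rel` values are hypothetical crawl data; a page whose count is zero is linked only with nofollow and is at risk of becoming an orphan.

```python
# Hypothetical internal links: (source page, target page, rel attribute value)
LINKS = [
    ("/", "/pricing", ""),
    ("/", "/careers", "nofollow"),
    ("/blog/", "/pricing", ""),
    ("/blog/", "/careers", "nofollow"),
    ("/pricing", "/contact", ""),
]


def followed_inlinks(links):
    """Count inbound internal links without rel="nofollow" for every linked page."""
    counts = {}
    for source, target, rel in links:
        counts.setdefault(target, 0)
        if "nofollow" not in rel.lower().split():
            counts[target] += 1
    return counts


counts = followed_inlinks(LINKS)
print({page: n for page, n in counts.items() if n == 0})  # {'/careers': 0}
```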

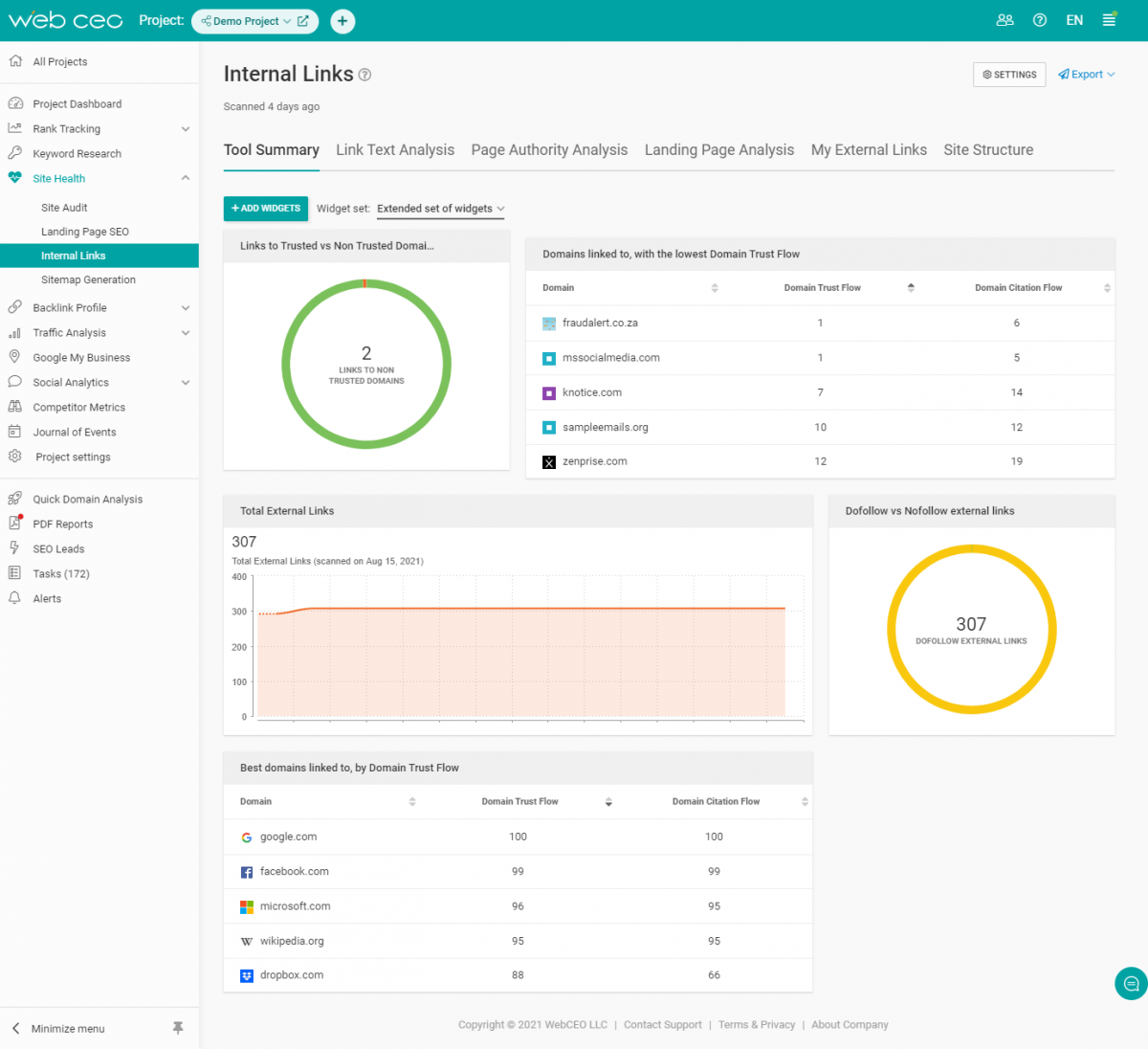

The WebCEO Internal Links Tool will help you check all of your internal links, their anchor texts and status for you to understand how juice is distributed across a website’s pages.

- Error Pages

Error pages are the last thing anyone wants to see on a website.

Currently, error pages don’t affect rankings in any way. However, to manage them properly, you have to meet some requirements: they should neither be blocked in the robots.txt file nor tagged with a noindex meta tag. Google should see these pages during crawling to understand that they are no longer available and such URLs no longer exist.

Nevertheless, even if Google doesn’t consider error pages bad practice, they are bad in terms of user experience! Since user behavior is an important ranking factor, you should still work on error pages and sort everything out.

Let’s discuss what’s better to do if you have error pages:

1. Make your error pages attractive and helpful.

Each time we land on an error page, our first desire is to leave because we didn’t find what we needed. That’s a bad scenario for any webmaster and SEOer. To avoid this situation, decorate error pages according to your corporate style and give visitors some relevant information.

A lot of companies use creative approaches to make their 404 pages entertaining. Take notes and follow great examples! Ideally, the design of your 404 pages should match the general style of the website.

On a 404 page, provide a list of links to other pages or articles on your website with information that they may have been looking for. You’d better avoid putting a link to the home page because the information there is pretty basic and Google doesn’t appreciate this practice. Give sources of useful data and visitors will be more than happy about this.
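Whatever the design, a custom error page must still return a real 404 status code; otherwise it turns into a soft 404. As a hedged illustration, assuming a Python WSGI application, the handler below serves a helpful page with suggested links while keeping the `404 Not Found` status. The suggested URLs are hypothetical.

```python
from html import escape

# Hypothetical pages worth suggesting to visitors who hit a missing URL
SUGGESTIONS = [
    ("/blog/technical-seo-audit", "How to run a technical SEO audit"),
    ("/pricing", "Pricing"),
]


def not_found_app(environ, start_response):
    """Serve a branded, helpful 404 page while keeping the real 404 status code."""
    items = "".join(
        f'<li><a href="{escape(href)}">{escape(title)}</a></li>'
        for href, title in SUGGESTIONS
    )
    body = (
        "<!doctype html><title>Page not found</title>"
        "<h1>Sorry, we couldn't find that page</h1>"
        f"<p>You might be looking for:</p><ul>{items}</ul>"
    ).encode("utf-8")
    start_response(
        "404 Not Found",
        [("Content-Type", "text/html; charset=utf-8"), ("Content-Length", str(len(body)))],
    )
    return [body]
```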

The WebCEO Site Audit Tool will help you find all the error pages on your website.

2. Identify whether there are any soft 404 pages on your website and fix them.

Generally speaking, a soft 404 page is an error page with a “200” success status code instead of 404/410/301 because of a badly configured server.
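Google’s own soft 404 detection is not public, but a simple heuristic can shortlist suspicious URLs from a crawl: pages that return 200 yet contain typical “not found” wording or almost no text. The phrases and the 50-word threshold below are assumptions to tune for your site.

```python
import re

# Assumed wording that often appears on "not found" templates; tune it for your site
NOT_FOUND_WORDING = re.compile(
    r"page not found|no longer available|doesn't exist|does not exist", re.IGNORECASE
)
MIN_WORDS = 50  # assumed threshold below which a page counts as nearly empty


def looks_like_soft_404(status, text):
    """Flag pages that return 200 but read like an error page or have almost no text."""
    if status != 200:
        return False  # real error codes are not soft 404s
    return bool(NOT_FOUND_WORDING.search(text)) or len(text.split()) < MIN_WORDS
```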

You can easily find soft 404 pages with paid SEO audit tools like Ahrefs or with the help of Google Search Console.

Enter your Google Search Console account and open the “Coverage” report. There you will see if there are any issues with the validation of your pages. In the “Details” section a line “Submitted URL seems to be a Soft 404” will be shown.

You can also use the URL Inspection Tool in Google Search Console. You just need to enter the URL you want to check and you will get all the current information from the Google index about this page.

If the page is a soft 404 with an accidental 200 success code from your server, ask your developer to configure this problem. If you have no intention of filling this page with new content, leave it as it is. As it is said in the Google Webmaster Guidelines, 404 pages will not be shown in the Google Search Console’s Coverage report after about a month.
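Google Search Console only reports the soft 404s Google has already noticed, so you may want to check your own crawl data as well. The sketch below flags pages that return 200 but read like an error page or are nearly empty. It assumes you already have each page’s status code and HTML; the phrase list and the 50-word threshold are arbitrary assumptions to tune for your site, and a heuristic like this will produce false positives, so review flagged pages manually.

```python
import re

# Assumed phrases that often appear on "not found" pages; adjust for your site's wording.
ERROR_PHRASES = ("page not found", "404 not found", "no longer available", "does not exist")
MIN_WORDS = 50  # assumed threshold: pages with less visible text look "empty"


def visible_text(html: str) -> str:
    """Crudely approximate the visible text: drop scripts and styles, then all tags."""
    html = re.sub(r"(?is)<(script|style)\b.*?</\1>", " ", html)
    return re.sub(r"<[^>]+>", " ", html)


def looks_like_soft_404(status_code: int, html: str) -> bool:
    """Flag pages that answer 200 OK but read like an error page or are nearly empty."""
    if status_code != 200:
        return False  # a real 404/410 or a redirect is not a *soft* 404
    text = " ".join(visible_text(html).lower().split())
    if any(phrase in text for phrase in ERROR_PHRASES):
        return True
    return len(text.split()) < MIN_WORDS


print(looks_like_soft_404(200, "<h1>Page not found</h1><p>Sorry!</p>"))
```

A related quick check: request a made-up URL on your site (for example, one with a random slug). If the server answers with 200 instead of 404, every missing page on the site is a potential soft 404.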

Why is it important to fix soft 404 pages?

They mislead Google. Because they return a 200 success status code, soft 404 pages tell Google that they are fine and can be indexed, and therefore shown in search engine result pages. People will then land on pages with no content. That’s bad.

In addition, Google wastes crawl budget on such pages instead of spending it on the pages that matter. Crawl budget is partly in your hands, so manage the factors you control properly: it will play to your advantage.

If you want to resolve a soft 404 problem but keep the page because of the link juice it has gained, there is a way: add a noindex robots meta tag to it. Google will still crawl the page, but it will keep it out of search results, while the page itself stays on your site along with the links pointing to it.
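After adding the tag, it’s worth confirming that it actually made it into the page’s HTML, where it looks like `<meta name="robots" content="noindex">`. A minimal sketch using Python’s built-in html.parser; it only looks at robots and googlebot meta tags and ignores the X-Robots-Tag HTTP header, which can also carry noindex.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collects directives from <meta name="robots"> and <meta name="googlebot"> tags."""

    def __init__(self) -> None:
        super().__init__()
        self.directives: set[str] = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        # html.parser lowercases attribute names; valueless attributes come as None.
        attr = {name: (value or "") for name, value in attrs}
        if attr.get("name", "").lower() in ("robots", "googlebot"):
            for part in attr.get("content", "").split(","):
                self.directives.add(part.strip().lower())


def has_noindex(html: str) -> bool:
    """Return True if the page carries a noindex robots meta directive."""
    parser = RobotsMetaParser()
    parser.feed(html)
    return "noindex" in parser.directives


print(has_noindex('<head><meta name="robots" content="noindex, follow"></head>'))
```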

3. Add a redirect if you have relevant content.

If you have moved or deleted content, you can set up a 301 redirect so the user’s journey through the website isn’t interrupted. Visitors will barely notice the change (as long as the redirect is fast), while Google will see that the page has permanently moved to a new address.

The page you redirect to should be relevant to the content that used to be at the old URL.
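After several rounds of site changes, redirects tend to pile up into chains (A → B → C), and every extra hop makes the redirect slower. Assuming you keep your redirects as a simple old-URL-to-new-URL map (the map below is hypothetical), this sketch resolves each old URL to its final destination so every 301 can point there directly, and it stops with an error if it finds a loop.

```python
def flatten_redirects(redirects: dict[str, str]) -> dict[str, str]:
    """Map every source URL straight to its final destination.

    Raises ValueError if the map contains a redirect loop (e.g. A -> B -> A).
    """
    flat = {}
    for source in redirects:
        seen = {source}
        target = redirects[source]
        # Follow the chain until we reach a URL that is not redirected any further.
        while target in redirects:
            if target in seen:
                raise ValueError(f"redirect loop involving {target}")
            seen.add(target)
            target = redirects[target]
        flat[source] = target
    return flat


# Hypothetical redirect map built up over several site changes.
redirects = {
    "/old-guide": "/guide-2020",
    "/guide-2020": "/guides/technical-seo",
}
print(flatten_redirects(redirects))
```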

- Orphan and dead-end pages

Error pages are not the only problem you can encounter while conducting a technical investigation of your projects.

Orphan and dead-end pages are also a matter of concern for any webmaster or SEOer, since they cause problems for both Google and website owners.

Let’s explain each of them:

Orphan pages are pages that no other page of the website links to. In simple words, visitors can’t reach them by navigating the site, and often there is no way to move on from them either. Some time ago, such pages were popular for marketing purposes because they were designed to keep a visitor on a specific page as long as possible. However, times change.

Why are orphan pages bad?

- They waste Google’s crawl budget.

- Without links pointing to them, it’s hard for Google to understand how these pages relate to the rest of the website and whether they are relevant.

- They don’t receive any authority (link juice), since authority is passed from page to page along internal links.

- People can find these pages only if they already have a direct link to them, which means low traffic.

- Even if an orphan page is found, being trapped on a single page is an ordeal for the user. We all like to follow links to original sources or additional material. These pages damage the user experience.

If you have orphan pages on your website, fix them: link to them from relevant pages so they become a useful and helpful part of the site.
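Finding orphan pages comes down to comparing two lists: the URLs you know exist (for example, from your CMS or sitemap) and the URLs that receive at least one internal link in a crawl. A sketch assuming you already have the crawl’s link graph as a dictionary that maps each page to the internal links found on it; the URLs are hypothetical.

```python
def find_orphan_pages(known_pages: set[str], links: dict[str, set[str]]) -> set[str]:
    """Return known pages that no *other* crawled page links to."""
    linked_to: set[str] = set()
    for source, targets in links.items():
        # A page linking to itself doesn't make it reachable from the rest of the site.
        linked_to |= {target for target in targets if target != source}
    return known_pages - linked_to


# Hypothetical data: pages listed in the CMS and internal links found by a crawler.
known = {"/", "/blog/", "/blog/post-1/", "/promo-2019/"}
links = {
    "/": {"/blog/"},
    "/blog/": {"/", "/blog/post-1/"},
    "/blog/post-1/": {"/blog/"},
    "/promo-2019/": {"/promo-2019/"},
}
print(find_orphan_pages(known, links))
```

Keep in mind that a crawler starting from your home page usually won’t reach orphan pages at all, which is exactly why the list of known URLs has to come from another source.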

Dead-end pages are pages that don’t link to any other internal or external resources. They are linked to by other pages, but the authority transfer stops on this page.

This is bad because Google finds no further links to follow, so crawling and the transfer of authority stop there. Visitors see no way forward except the Back button, which hurts the user experience. Avoid creating dead-end pages, and eliminate existing ones by adding relevant links.
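To find dead-end pages, extract the links from each page’s HTML and flag the pages that link nowhere except back to themselves. A sketch using only Python’s standard library; skipping mailto:, tel: and javascript: links is our assumption about what shouldn’t count as a way forward.

```python
from html.parser import HTMLParser
from urllib.parse import urldefrag, urljoin


class LinkCollector(HTMLParser):
    """Collects the href values of all <a> tags in a page."""

    def __init__(self) -> None:
        super().__init__()
        self.hrefs: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.hrefs.append(value)


def outgoing_links(page_url: str, html: str) -> set[str]:
    """Absolute URLs the page links to, excluding links back to the page itself."""
    collector = LinkCollector()
    collector.feed(html)
    page = urldefrag(page_url).url
    links = set()
    for href in collector.hrefs:
        if href.startswith(("mailto:", "tel:", "javascript:")):
            continue  # assumed not to count as a way forward
        absolute = urldefrag(urljoin(page_url, href)).url  # drop "#fragment" parts
        if absolute != page:
            links.add(absolute)
    return links


def is_dead_end(page_url: str, html: str) -> bool:
    """A dead-end page offers no link to any other page, internal or external."""
    return not outgoing_links(page_url, html)


print(is_dead_end("https://example.com/a/", '<p>Text only</p><a href="#top">Top</a>'))
```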

- Sitemap

A sitemap is not strictly necessary: Google doesn’t require you to submit one. Still, its value is high. A sitemap helps Google learn how a website is structured and which pages it contains a lot faster and more easily than if the search engine had to discover everything on its own.

So, helping Google will also help you.

First, let’s look at the sitemap features every SEOer or webmaster should consider:

- Sitemaps have size limits: a single sitemap can list at most 50,000 URLs and must be no larger than 50 MB uncompressed. If your website is too big to fit within these limits, don’t panic. You can create several sitemaps and submit them all to Google Search Console. Just don’t try to squeeze everything into one sitemap;

To reduce a sitemap’s file size, you can compress it with gzip, turning sitemap.xml into sitemap.xml.gz. A compressed file is smaller and faster to download, but the 50 MB limit still applies to the uncompressed file.

- A sitemap should contain the URLs you want to prioritize. For instance, if one page is available in non-www and www, HTTPS and HTTP versions, include only the version you want to be the main one. Most often, this should be the URL you set as canonical with the rel="canonical" tag in your website’s HTML code. This way, Google will understand which pages you want to bring to the foreground and where to send traffic;

- Don’t include URLs that you have blocked in the robots.txt file or marked with a noindex tag. This sends Google conflicting signals: the sitemap asks it to crawl and index pages that you have told it to skip, and it won’t know what exactly to do with them.

- Some pages shouldn’t be in sitemaps:

- soft 404s,

- password-protected pages that return a 401 status code,

- pages with a permanent redirect: include the final destination page instead,

- non-canonical pages: include only the canonical versions, i.e. the URLs your rel="canonical" tags point to,

- pages from A/B testing: include only the original page (the one designated with the rel="canonical" tag in your code), not the testing URLs.

- If your website has a lot of media content such as videos and images, or news articles in a news section, consider creating separate sitemaps for these types of content. They can carry additional information about that content, which helps Google understand it better. In some cases, such sitemaps will also help Google discover images or videos it can’t find on its own.

- Dynamic sitemaps are gaining popularity these days. Their main advantage is that you don’t need to create and submit a new sitemap after every change to your website’s structure or content: a dynamic sitemap reflects changes automatically, so the information about your website is always up to date.

Such sitemaps are fast and don’t require a lot of resources. The easiest way to get one is through a good CMS. If your website doesn’t run on a CMS, ask your developers to build a dynamic sitemap in code.
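If you build a dynamic sitemap yourself, the inclusion rules above boil down to a simple filter. The sketch assumes your CMS or crawler can tell you, for every page, its status code, canonical URL, and whether it is noindexed, blocked in robots.txt, or flagged as a soft 404; the Page fields are hypothetical names, not any particular CMS’s API.

```python
from dataclasses import dataclass


@dataclass
class Page:
    """Hypothetical per-page record, e.g. assembled from a CMS or a crawl."""
    url: str
    status: int                   # HTTP status code the URL returns
    canonical: str                # target of its rel="canonical" tag (own URL if self-canonical)
    noindex: bool = False         # carries a noindex robots directive
    robots_blocked: bool = False  # disallowed in robots.txt
    soft_404: bool = False        # flagged as a soft 404


def sitemap_urls(pages: list[Page]) -> list[str]:
    """Keep only canonical, indexable pages that answer 200 OK."""
    keep = []
    for page in pages:
        if page.status != 200:
            continue  # drops 401s, 404s and redirects (their destinations are listed instead)
        if page.soft_404 or page.noindex or page.robots_blocked:
            continue  # empty content or conflicting signals
        if page.canonical != page.url:
            continue  # non-canonical duplicates and A/B test variants
        keep.append(page.url)
    return sorted(keep)


site = [
    Page("https://example.com/", 200, "https://example.com/"),
    Page("http://example.com/", 301, "https://example.com/"),
    Page("https://example.com/members/", 401, "https://example.com/members/"),
    Page("https://example.com/landing/", 200, "https://example.com/landing/"),
    Page("https://example.com/landing-b/", 200, "https://example.com/landing/"),
    Page("https://example.com/thanks/", 200, "https://example.com/thanks/", noindex=True),
]
print(sitemap_urls(site))
```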

A little while ago, Google released a series of educational videos about Google Search Console, including one about sitemaps. It’s worth watching if you want to make things clearer.

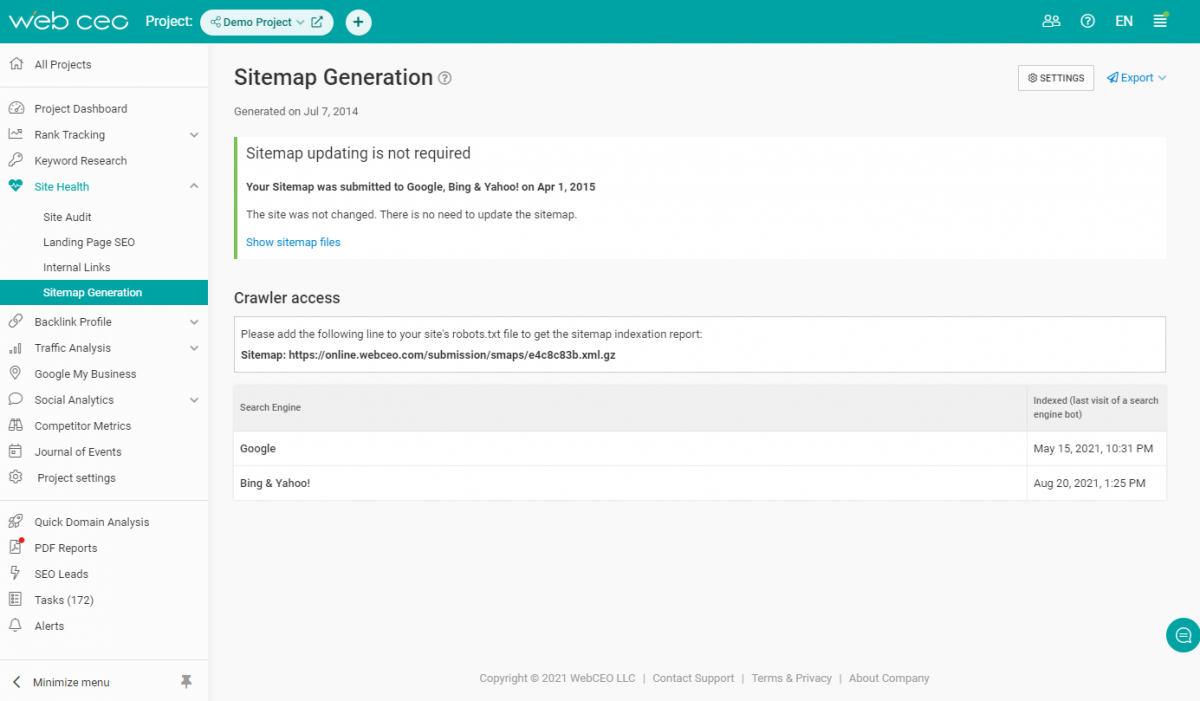

If you have trouble creating and submitting a sitemap, the WebCEO Sitemap Generation Tool will help you create one.
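If you’d rather generate sitemap files yourself, keep in mind that the sitemap protocol caps each file at 50,000 URLs and 50 MB uncompressed, so large sites need several files plus a sitemap index that lists them (you can then submit just the index). A minimal sketch with Python’s standard library that also gzips each file; the base URL and output directory are placeholders, and it doesn’t check the 50 MB limit, which would require measuring each file’s uncompressed size.

```python
import gzip
from pathlib import Path
from xml.sax.saxutils import escape

MAX_URLS = 50_000  # per-file URL limit from the sitemap protocol
NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
XML_DECL = '<?xml version="1.0" encoding="UTF-8"?>\n'


def urlset_xml(urls: list[str]) -> str:
    """Build one <urlset> sitemap document; escape() handles characters like '&'."""
    entries = "".join(f"  <url><loc>{escape(u)}</loc></url>\n" for u in urls)
    return f'{XML_DECL}<urlset xmlns="{NS}">\n{entries}</urlset>\n'


def write_sitemaps(urls: list[str], out_dir: Path, base_url: str,
                   chunk_size: int = MAX_URLS) -> list[str]:
    """Write sitemap-N.xml.gz files plus sitemap-index.xml; return the sitemap URLs."""
    out_dir.mkdir(parents=True, exist_ok=True)
    locs = []
    for n, start in enumerate(range(0, len(urls), chunk_size), start=1):
        name = f"sitemap-{n}.xml.gz"
        # gzip in text mode compresses the XML as it is written.
        with gzip.open(out_dir / name, "wt", encoding="utf-8") as f:
            f.write(urlset_xml(urls[start:start + chunk_size]))
        locs.append(f"{base_url.rstrip('/')}/{name}")
    entries = "".join(f"  <sitemap><loc>{escape(loc)}</loc></sitemap>\n" for loc in locs)
    index = f'{XML_DECL}<sitemapindex xmlns="{NS}">\n{entries}</sitemapindex>\n'
    (out_dir / "sitemap-index.xml").write_text(index, encoding="utf-8")
    return locs
```

A call such as `write_sitemaps(urls, Path("public"), "https://example.com")` (placeholder values) would produce the sitemap files and the sitemap-index.xml file to submit.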

- .htaccess file

This part of the article is for those who work with an Apache web server or know how to handle one. Apache supports .htaccess files, which let webmasters (or anyone who has access to them) configure server settings for a directory without editing the main server configuration.

With this file, you can implement several things we talked about in this article. For example, a webmaster can easily:

- Add redirects, for example when changing domain names or reorganizing the whole site;

- Serve custom error pages that match the website’s corporate style instead of the server’s default ones;

- Redirect a website from HTTP to HTTPS;

- Password-protect certain directories on the server to keep their data away from unauthorized users.

You can do many other useful things with it, too. If you haven’t worked with the .htaccess file before but have the opportunity, give it a try. Even if you don’t use it in the future, the knowledge is worth it.
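To make the list above more concrete, here is a sketch of a small Python helper that writes some of these rules into .htaccess text from a redirect map. The directives are standard Apache ones (ErrorDocument, plus RewriteEngine, RewriteCond and RewriteRule from mod_rewrite), but the domain and paths are placeholders, mod_rewrite must be enabled, and AllowOverride must permit these directives. Always test changes on a staging copy: a single typo in .htaccess can make the whole site return 500 errors.

```python
import re


def build_htaccess(domain: str, redirects: dict[str, str],
                   error_pages: dict[int, str], force_https: bool = True) -> str:
    """Assemble .htaccess rules for a site whose .htaccess sits in the document root."""
    lines = []
    for code, path in sorted(error_pages.items()):
        # Serve your own branded page instead of Apache's default error page.
        lines.append(f"ErrorDocument {code} {path}")
    lines.append("RewriteEngine On")
    for old, new in redirects.items():
        # In .htaccess, RewriteRule matches the path without its leading slash;
        # re.escape keeps characters like "." from acting as regex wildcards.
        pattern = re.escape(old.lstrip("/"))
        lines.append(f"RewriteRule ^{pattern}$ https://{domain}{new} [R=301,L]")
    if force_https:
        # Catch-all: send any remaining plain-HTTP request to the same URL over HTTPS.
        lines.append("RewriteCond %{HTTPS} off")
        lines.append("RewriteRule ^ https://%{HTTP_HOST}%{REQUEST_URI} [R=301,L]")
    return "\n".join(lines) + "\n"


print(build_htaccess("example.com", {"/old-page.html": "/new-page/"}, {404: "/404.html"}))
```

The specific page redirects come before the catch-all HTTPS rule, so a request to an old HTTP URL is sent straight to its new HTTPS address in a single hop rather than two.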

FINAL STEP: Always monitor results and make changes when necessary.

We recommend monitoring results with the tools we have already mentioned, such as Google Search Console.
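Between scheduled scans, a simple way to catch regressions is to compare two crawl exports and list the URLs whose status got worse. A sketch assuming each export is a CSV file with url and status columns; those column names are hypothetical, so adjust them to whatever your crawler or tool exports.

```python
import csv
import io
from typing import Optional


def load_statuses(csv_text: str) -> dict[str, int]:
    """Read a crawl export with 'url' and 'status' columns into {url: status}."""
    reader = csv.DictReader(io.StringIO(csv_text))
    return {row["url"]: int(row["status"]) for row in reader}


def new_problems(previous: dict[str, int],
                 current: dict[str, int]) -> dict[str, tuple[Optional[int], int]]:
    """URLs that now return an error (4xx/5xx) but were fine, or not crawled, last time."""
    problems = {}
    for url, status in current.items():
        old = previous.get(url)
        if status >= 400 and (old is None or old < 400):
            problems[url] = (old, status)
    return problems


# Hypothetical exports from two consecutive scans.
before = load_statuses("url,status\n/a,200\n/b,200\n/c,404\n")
after = load_statuses("url,status\n/a,200\n/b,500\n/c,404\n/d,404\n")
print(new_problems(before, after))
```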

We also have to mention Google Analytics, an important tool for getting deeper into the minds of your visitors. We will discuss it a little later, so stay tuned.

You can also make your life a lot easier with the WebCEO SEO Tools, which let you automate much of the optimization routine. For instance, you can schedule scans for every tool and receive the results by email as PDF reports.

To be informed about any issues with your projects instantly, you can use the WebCEO Alert Tool. It notifies you by email about problems right away, so you can fix them without delay.

Moreover, as you have already seen, WebCEO integrates with the most important Google tools. Instead of jumping from one instrument to another, you have everything within one platform.

If you work in a team on a large number of projects at once, WebCEO offers a helpful automation feature: you can group your projects and set up specific scan conditions and schedules for each group. This keeps your team members’ work systematized and on schedule, and makes batch analysis and optimization easy.

CONCLUSION

A technical SEO audit won’t take too much of your time, especially if you work with SEO tools. Resolving the issues you find might take a lot longer. That’s to be expected, so don’t waste time doing low-quality work.

Good luck and make your projects first-rate with the WebCEO SEO Tools!
